Building a Write-Optimized Database Engine: The Memtable (Part 2)

A deep dive into the architecture and implementation details of Memtables.

In our previous issue, we explored the high-level architecture of Log-Structured Merge Trees — a log-structured storage framework. We also compared their characteristics with those of traditional B-tree-based storage engines. I would suggest going through the previous issue before you continue with this one to ensure you understand what’s going on.

In this issue, we’ll focus on designing and implementing a core component of the LSM Tree that allows it to have exceptionally fast writes - the memtable.

We will be using Go to implement this LSM Tree and the complete code that we will be working towards is available at github.com/JyotinderSingh/goLSM.

The Read API

To enable a streamlined design of the interactions between the LSM tree and its storage components, we aim to establish a similar API surface for both the Memtable and the SSTable. Since both structures serve the primary purpose of storing similar types of information, aligning their interfaces as closely as possible simplifies the overall system architecture.

However, it's important to recognize that each component will possess unique methods reflective of their distinct roles—for instance, the SSTable, being immutable, will not include a Put method. Likewise, the Memtable will not support compaction.

The critical operation common to both components is data retrieval. To accommodate this, both the Memtable and the SSTable will support the following API methods:

Get(key string) *LSMEntry: Retrieves the value associated with a given key from the Memtable/SSTable.RangeScan(startKey string, endKey string) []*LSMEntry: Conducts a range scan on the Memtable/SSTable, covering all entries from startKey to endKey inclusive.

Achieving completely identical function signatures for both components is difficult, particularly because Memtable operations do not typically result in errors during reads, whereas SSTables, interacting with disk-based data, may encounter issues such as data corruption. Despite these differences, our goal is to maintain as consistent an interface as possible. To achieve this, one approach could be to have the Memtable return a 'dummy' nil error in its API responses, thus aligning more closely with the SSTable’s interface where error handling is a necessary consideration (however, I won’t be worrying too much about that in my implementation).

LSMEntry

The LSMEntry represents a single entry in the LSM Tree. It contains the key associated with the entry, the operation being performed on it, and other auxiliary information. It is defined as a protobuf message that uses protocol buffers for its efficient serialization and deserialization, as well as type safety.

message LSMEntry {

string key = 1;

Command command = 2;

optional bytes value = 3;

int64 timestamp = 4;

}This structure encapsulates the key and value pair, along with additional metadata such as a command indicating the operation (e.g., insert, delete) and a timestamp for versioning purposes (which we will go over in later sections).

A Quick Memtable Recap

The memtable serves as the LSM Tree's in-memory buffer, playing an important role in enabling high write-throughput. This is primarily because writes are first buffered in RAM, allowing for quick data ingestion, before they are eventually flushed to disk.

We previously discussed that data within the memtable is maintained in sorted order. A key rationale for this approach was to ensure that, upon being flushed to disk, the data remains in sorted order. Maintaining data in sorted order as it arrives proves to be more efficient than sorting it at the time of disk flushing, which, in turn, accelerates the disk flush process and improves the durability of the data in the system.

However, another primary reason for maintaining data in sorted order is to enable efficient range scans. Range scans are queries that request a sequence of records, and they are only efficient if the queried data is pre-sorted. Without sorted order, you would be forced to iterate through every entry in the system, significantly impacting performance. This principle holds for data in both on-disk and in-memory structures. Moreover, having all data in sorted order simplifies merging outputs from range scans across both the in-memory and on-disk representations.

Data Storage using SkipLists

With this information in mind, a straightforward choice for the underlying data structure for the memtable would be a sorted tree, often a Red-Black tree. However, there is another data structure that systems like Cassandra or RocksDB (configurable) use for their in-memory representations - Skip Lists.

Skip Lists are probabilistic data structures that allow for an average-case complexity of O(log n) for search, insert, delete, and update operations, similar to balanced trees, but with a simpler design and implementation. Unlike balanced trees, which require complex restructuring during inserts and deletes, Skip Lists achieve their efficiency through layers of linked lists that skip over a subset of the elements. This enables fast searches, similar to binary search in sorted arrays, but adds flexibility for efficient insertions and deletions.

Skip lists naturally support efficient concurrent read and write operations, making them suitable for multi-threaded environments. Implementing concurrency in balanced binary trees is more complex and often requires additional locking or intricate designs to avoid performance bottlenecks. I won’t delve into Skip Lists' design and underlying implementation details since that is irrelevant to this material. There already is literature out there that discusses this topic quite well.1

While I will be using a skip list as the data structure of choice to implement the Memtable, you, however, are free to use a sorted tree implementation if you prefer.

I will be using github.com/huandu/skiplist as the implementation of choice. The clarity and quality of this code make it an excellent reference, and I recommend taking some time to go through it. This is not a thread-safe implementation, so we will be resorting to crude locking during reads and writes.

Memtable Data Structure Design

The Memtable struct needs only two fields. The first field is the storage data structure, which in this case is a SkipList.

The second field is an integer that tracks the current size of the Memtable in bytes. By monitoring the Memtable's size, we can determine when we need to flush its contents to disk.

Multi-Memtable Support

It is important to note that our Memtable implementation is intentionally not thread-safe. At first glance, this decision might appear counterintuitive to some, as one could argue that encapsulating concurrency management within the Memtable itself could simplify usage by abstracting away the complexities of concurrent access. However, this approach aligns with a broader architectural strategy within our LSM Tree.

The LSM Tree will be designed to manage multiple Memtables simultaneously. This design facilitates a higher degree of parallelism by allowing the system to "freeze" Memtables that have reached their capacity and are pending disk flush, while simultaneously allocating new Memtables to handle incoming writes. This multi-Memtable strategy necessitates synchronized access across all Memtables to ensure data consistency and integrity.

Implementing thread safety at the Memtable level would lead to a redundant layer of locks, potentially doubling the synchronization overhead and adversely affecting system performance. By moving the concurrency control up a level to the LSM Tree, we can efficiently manage concurrent access to multiple Memtables.

To interact with the Memtable, we define an interface that allows clients - primarily the LSM Tree - to efficiently query and modify its state. In addition to the API discussed above, we define the following methods for the Memtable interface:

Put(key string, value []byte):Inserts a key-value pair into the Memtable.Delete(key string):Marks a key-value pair as deleted in the Memtable.SizeInBytes() int64:Gets the size of the Memtable in bytes.GetEntries() []*LSMEntry:Produces a serializable list of Memtable entries in sorted order, primarily for SSTable generation.

Put

The Put method performs two functions. Firstly, it performs an upsert operation for a key-value (KV) pair. This means that if the specified key does not currently exist within the Memtable, the method inserts the new KV pair. Conversely, if the key already exists, the method updates the associated value with the new one provided.

Secondly, it tracks the change in the total byte size of the Memtable resulting from the upsert operation. For inserts, this process is straightforward: the sizes of both the key and the value are added to the Memtable's total size. Updates, however, are a little nuanced. Since an update involves replacing an existing value with a new one, the method must first subtract the byte size of the old value before adding the size of the new value.

Delete

Contrary to what one might expect, the Delete method in the Memtable does not simply reverse the operation performed by the Put method. Instead of removing a key-value pair, it introduces a special type of entry known as a tombstone. A tombstone is essentially a marker that indicates the deletion of a key. This is implemented by inserting a key into the Memtable with no associated value and setting the Command enum of the LSMEntry to Delete.

The rationale behind using tombstone entries rather than outright deletion is based on the overall storage architecture of our LSM Tree. Direct deletion from the active Memtable might suffice if the key-value pair exists solely in memory in the currently active memtable. However, if the key-value pair is also present in any of the frozen Memtables awaiting flush or within the on-disk SSTables, these instances would not be updated to reflect the deletion. Consequently, a query for this key could return outdated data from these components. Tombstones address this issue by marking the key as deleted across all instances. When the LSM Tree encounters a tombstone during a read operation, it recognizes that the key has been deleted and returns a nil value to the user, effectively preventing the retrieval of deleted data.

However, the use of tombstones introduces write amplification, particularly when numerous tombstone entries accumulate on disk. These entries, despite representing deleted data, continue to consume valuable disk space. To mitigate this, tombstones are purged during the process of SSTable compaction. This cleanup step involves removing any occurrences of the key that predate the tombstone, thereby reclaiming disk space and reducing write amplification.

Get

The Get method is straightforward. It searches for the requested key within the storage structure, which, in our implementation, is a SkipList. Whatever the returned result maybe - be it a valid key-value pair or a tombstone entry - is returned directly.

We delegate the responsibility of interpreting and handling these tombstones to the LSM Tree itself. This design choice ensures that the Memtable remains focused on its core function of efficient data retrieval, while the LSM Tree manages the broader context of how data, including deletions represented by tombstones, should be handled in response to user queries.

Range Scan

The RangeScan method leverages the underlying Find API of the SkipList to locate the starting point of the search range, identified by the startKey. The method then proceeds to iterate over subsequent elements in the SkipList. This loop continues until the method encounters a key that exceeds the endKey, signalling the end of the specified range. The method exits the loop and returns the slice of the collected results.

SizeInBytes

This is a simple method that just returns the size field of the Memtable.

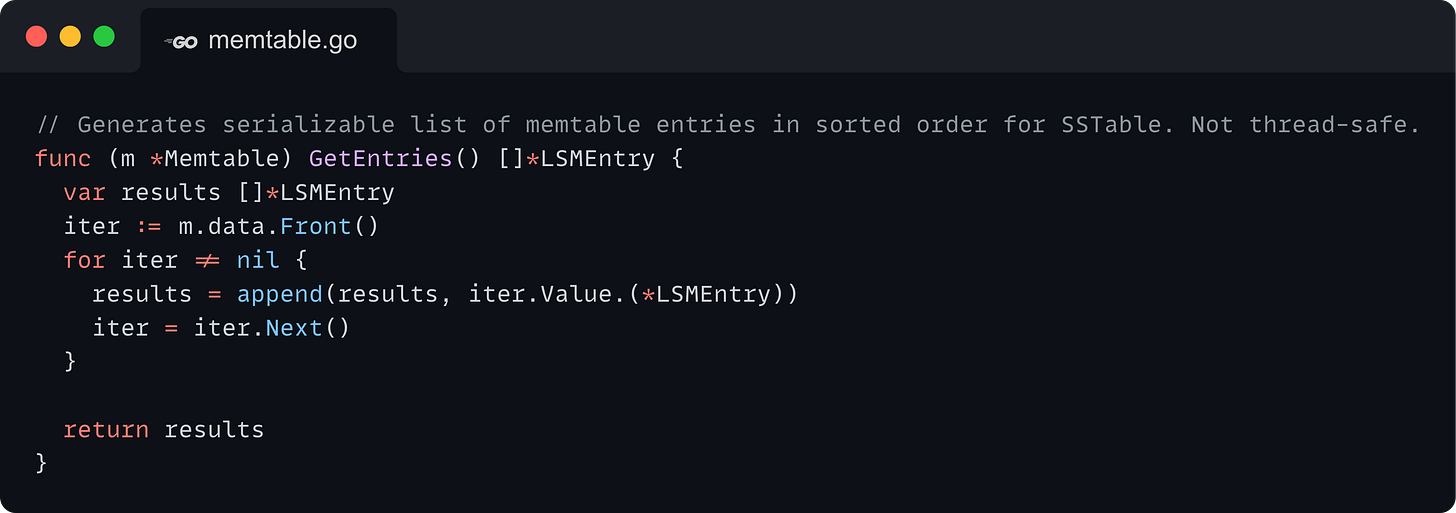

GetEntries

The GetEntries method allows LSM Tree to retrieve all the KV pairs stored in the Memtable in their sorted order. This is used to serialize the Memtable's data into an SSTable format, a topic we will explore in greater depth in upcoming issues.

It's worth considering, however, that directly utilizing the GetEntries method for this purpose might not always be the most memory-efficient approach. An alternative strategy involves the creation of an iterator to traverse the Memtable's elements sequentially. Such an iterator would significantly reduce memory overhead by eliminating the need to duplicate the Memtable's contents into a separate slice for serialization.

This consideration of memory efficiency becomes even more critical when dealing with SSTables, which can grow substantially larger than Memtables. Accordingly, we will implement a similar iterator mechanism for SSTables, allowing us to handle larger entry-sets. Readers are encouraged to explore the development of this iterator for the Memtable in their implementations.

Up Next

In this issue, we explored how to design a Memtable implementation for the LSM Tree, keeping in mind the overarching architectural considerations of the system.

In the upcoming issues, we will dive deeper into persistent storage as we explore the design and implementation of SSTables - an efficient on-disk data structure to persist the data currently residing in the memtable.

Finally, we will bring all these elements together to implement a fast and efficient storage engine.

https://15721.courses.cs.cmu.edu/spring2018/papers/08-oltpindexes1/pugh-skiplists-cacm1990.pdf

https://www.cs.cmu.edu/~ckingsf/bioinfo-lectures/skiplists.pdf

![// Insert a key-value pair into the Memtable. Not thread-safe. func (m *Memtable) Put(key string, value []byte) { sizeChange := int64(len(value)) existingEntry := m.data.Get(key) if existingEntry != nil { // If the entry already exists, we need to account for the size of the // old value. m.size -= int64(len(existingEntry.Value.(*LSMEntry).Value)) } else { // If the entry doesn't exist, we need to account for the size of the key. sizeChange += int64(len((key))) } // Update with the new entry. entry := getLSMEntry(key, &value, Command_PUT) m.data.Set(key, entry) m.size += sizeChange } // Insert a key-value pair into the Memtable. Not thread-safe. func (m *Memtable) Put(key string, value []byte) { sizeChange := int64(len(value)) existingEntry := m.data.Get(key) if existingEntry != nil { // If the entry already exists, we need to account for the size of the // old value. m.size -= int64(len(existingEntry.Value.(*LSMEntry).Value)) } else { // If the entry doesn't exist, we need to account for the size of the key. sizeChange += int64(len((key))) } // Update with the new entry. entry := getLSMEntry(key, &value, Command_PUT) m.data.Set(key, entry) m.size += sizeChange }](https://substackcdn.com/image/fetch/$s_!BMyz!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2Ff870356c-aaff-4579-950d-cf896979febd_2963x1860.png)

![// Range scan the Memtable, inclusive of startKey and endKey. Not thread-safe. func (m *Memtable) RangeScan(startKey string, endKey string) []*LSMEntry { var results []*LSMEntry // Find the first key that is >= startKey and use the FindNext() method to // iterate through the rest of the keys. iter := m.data.Find(startKey) for iter != nil { if iter.Element().Key().(string) > endKey { break } // We need to include the tombstones in the range scan. The caller will // need to check the Command field of the LSMEntry to determine if // the entry is a tombstone. results = append(results, iter.Value.(*LSMEntry)) iter = iter.Next() } return results } // Range scan the Memtable, inclusive of startKey and endKey. Not thread-safe. func (m *Memtable) RangeScan(startKey string, endKey string) []*LSMEntry { var results []*LSMEntry // Find the first key that is >= startKey and use the FindNext() method to // iterate through the rest of the keys. iter := m.data.Find(startKey) for iter != nil { if iter.Element().Key().(string) > endKey { break } // We need to include the tombstones in the range scan. The caller will // need to check the Command field of the LSMEntry to determine if // the entry is a tombstone. results = append(results, iter.Value.(*LSMEntry)) iter = iter.Next() } return results }](https://substackcdn.com/image/fetch/$s_!l4jm!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F2a980260-b23e-4196-9d55-13ba3f59b1da_2963x2112.png)